Kubernetes Series 1

1. Kubernetes Introduction

Origin: Google developed an internal system called Borg (and later a newer system called Omega) that helped both application developers and system administrators manage thousands of applications and services. Kubernetes grew out of that experience.

Definition: Kubernetes is a software system that allows you to easily deploy and manage containerized applications on top of it. Kubernetes abstracts away the hardware infrastructure and exposes your whole datacenter as a single enormous computational resource. It allows you to deploy and run your software components without having to know about the actual servers underneath.

1.1 Docker's relation with Kubernetes

Q: How does Kubernetes relate to Docker?

- Docker is a platform and tool for building, distributing, and running Docker containers. It offers its own native clustering tool, Docker Swarm, that can be used to orchestrate and schedule containers on machine clusters.

- Kubernetes is a container orchestration system for Docker containers that is more extensive than Docker Swarm and is meant to coordinate clusters of nodes at scale in production in an efficient manner.

Q: Can you use Kubernetes without Docker?

Yes. Docker is the most common container runtime used with Kubernetes, but Kubernetes supports other container runtimes as well.

As a container orchestrator, Kubernetes needs a container runtime on each node in order to run containers. It works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Both of these use runc as the low-level runtime that actually starts the containers.
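To check which runtime a given cluster actually uses, you can ask the API server what each node reports. The helper below is a minimal sketch: the function name `node_runtimes` is made up for illustration, and it assumes `kubectl` is already configured for your cluster.

```shell
# Print "<node-name><TAB><runtime>" for every node in the current cluster.
# node_runtimes is a hypothetical helper name, not part of kubectl.
node_runtimes() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}'
}
```

Calling `node_runtimes` prints one line per node. The runtime value is typically of the form `<runtime>://<version>`.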

CNCF Cloud Native Interactive Landscape

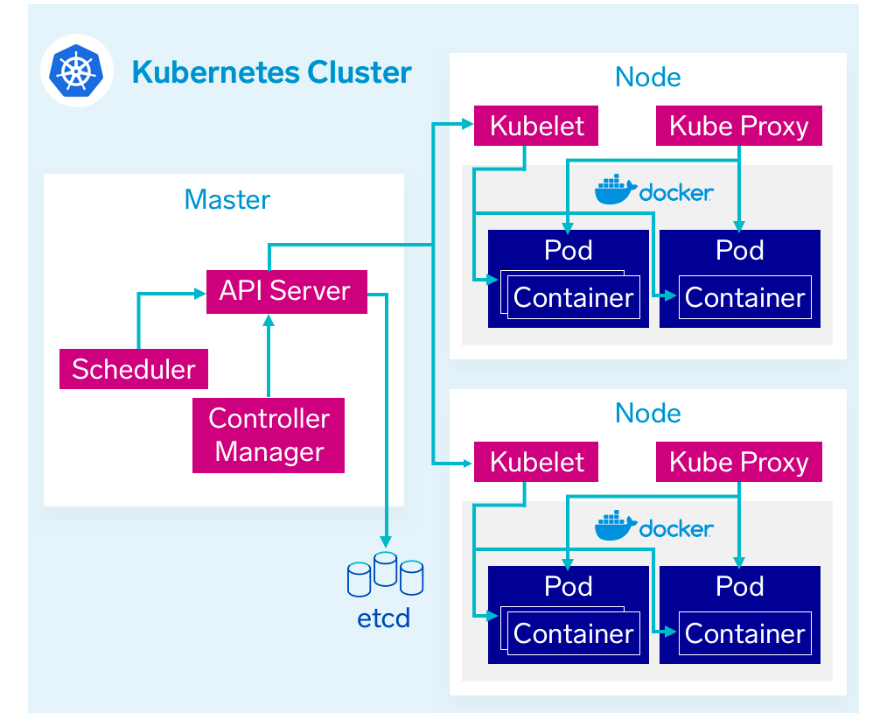

1.2 Architecture of a Kubernetes cluster

- The master node, which hosts the Kubernetes Control Plane that controls and manages the whole Kubernetes system

- Worker nodes that run the actual applications you deploy

- Node

- Pod

2. Kubernetes setup

https://kubernetes.io/docs/setup/

Production environment

- Build Your Own K8S

- Cloud Providers

Learning environment

- minikube

- Katacoda (https://www.katacoda.com/courses/kubernetes/playground)

2.1 Minikube

Prerequisites:

- kubectl (https://kubernetes.io/docs/tasks/tools/install-kubectl/)

- VirtualBox (https://www.virtualbox.org/wiki/Downloads)

Documentation:

- https://github.com/kubernetes/minikube

- https://kubernetes.io/docs/setup/learning-environment/minikube/

- https://kubernetes.io/docs/tasks/tools/install-minikube/

- https://minikube.sigs.k8s.io/docs/examples/

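Network issue workaround:

- If GCR (Google Container Registry) is unreachable from your network, the Aliyun fork of minikube and the accompanying article describe a workaround:

https://github.com/AliyunContainerService/minikube

https://yq.aliyun.com/articles/221687

To clean up an existing installation, run `minikube delete` and `rm -rf ~/.minikube`.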

Step 1:

curl -Lo minikube https://github.com/kubernetes/minikube/releases/download/v1.5.2/minikube-darwin-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/

Or download the binary manually from https://github.com/kubernetes/minikube/releases/download/v1.5.2/minikube-darwin-amd64 and save it as minikube.

Change the working directory to the folder that contains the downloaded file, then run:

chmod +x minikube && sudo mv minikube /usr/local/bin/

Verify the installation:

minikube version
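The download step can be parameterised by version and platform. The sketch below assumes release assets are always named `minikube-<os>-<arch>`, as in the URL used in Step 1; the function names `minikube_url` and `install_minikube` are illustrative, not part of minikube.

```shell
# Build the download URL for a minikube release binary.
# Assumption: assets follow the minikube-<os>-<arch> naming seen in Step 1.
minikube_url() {
  version="$1"   # e.g. v1.5.2
  os="$2"        # e.g. darwin or linux
  arch="$3"      # e.g. amd64
  echo "https://github.com/kubernetes/minikube/releases/download/${version}/minikube-${os}-${arch}"
}

# Download a release, make it executable, and move it onto the PATH
# (the same three actions as the one-liner in Step 1).
install_minikube() {
  curl -Lo minikube "$(minikube_url "$1" "$2" "$3")" \
    && chmod +x minikube \
    && sudo mv minikube /usr/local/bin/
}
```

For example, `install_minikube v1.5.2 darwin amd64` reproduces the command in Step 1.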

Step 2:

`minikube start`

Or, if the default image registry is unreachable from your network:

`minikube start --image-mirror-country cn`

Step 3:

minikube dashboard

2.2 IBM Cloud Kubernetes Service (IKS)

https://cloud.ibm.com/docs/containers?topic=containers-cs_cluster_tutorial

Prerequisites:

- kubectl

- IBM Cloud account (Account: IBM)

- IBM Cloud CLI

- IBM Cloud CLI plugin: ibmcloud plugin install container-service

https://cloud.ibm.com/docs/cli?topic=cloud-cli-install-devtools-manually

Verify the installation:

ibmcloud --version

ibmcloud plugin list

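- Step 1: Set up a free cluster (valid for 30 days) at https://cloud.ibm.com/kubernetes/catalog/cluster/create

- Step 2: Log in

ibmcloud login --sso -a cloud.ibm.com -r us-south -g default

- Step 3: List the worker nodes of the cluster (replace the cluster ID with your own)

ibmcloud ks worker ls --cluster bnt2k66d0he0rv8biarg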

3. Kube Context

https://kubernetes.io/docs/concepts/configuration/organize-cluster-access-kubeconfig/

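kubectl reads cluster access settings from the file(s) named in the KUBECONFIG environment variable. If the variable is unset, it uses $HOME/.kube/config.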

List all contexts:

kubectl config get-contexts

Choose the current context:

kubectl config use-context <context-name>

View the current context:

kubectl config current-context
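When you switch between several clusters (for example minikube and IKS), it can help to check that a context exists before selecting it. This is a minimal sketch; `switch_context` is a hypothetical helper name, and it relies only on `kubectl config get-contexts -o name` and `kubectl config use-context`.

```shell
# Switch to a context only if it exists in the current kubeconfig.
# switch_context is a hypothetical helper name, not a kubectl command.
switch_context() {
  target="$1"
  # "get-contexts -o name" prints one context name per line;
  # grep -x -F matches the whole line as a fixed string.
  if kubectl config get-contexts -o name | grep -qxF -- "$target"; then
    kubectl config use-context "$target"
  else
    echo "context not found: $target" >&2
    return 1
  fi
}
```

For example, `switch_context minikube` selects the minikube context if it is defined, and returns a non-zero status otherwise.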

4. Node

The worker nodes are the machines that run your containerized applications.

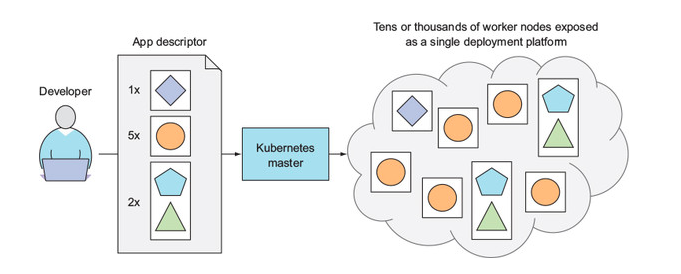

When the developer submits a list of apps to the master, Kubernetes deploys them to the cluster of worker nodes. What node a component lands on doesn’t (and shouldn’t) matter—neither to the developer nor to the system administrator.

To run an application in Kubernetes, you first need to package it up into one or more container images, push those images to an image registry, and then post a description of your app to the Kubernetes API server. The scheduler then assigns the specified groups of containers to the available worker nodes, based on the computational resources each group requires and the unallocated resources on each node at that moment.
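The three steps in the paragraph above (package, push, describe) can be sketched as a pair of shell functions. Everything named here is an assumption for illustration: the function names, the pod name, and the request sizes (100m CPU, 64Mi memory). The `resources.requests` section is the part of the description the scheduler compares against each node's unallocated resources.

```shell
# Write a minimal Pod description that declares the resources it needs.
# Pod name and request sizes are illustrative assumptions.
write_pod_manifest() {
  image="$1"; out="$2"
  cat > "$out" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: hello-nodejs
spec:
  containers:
  - name: hello-nodejs
    image: ${image}
    resources:
      requests:
        cpu: 100m
        memory: 64Mi
EOF
}

# Package the app into an image, push it to a registry,
# then post the description to the API server.
deploy_app() {
  image="$1"; manifest="$2"
  docker build -t "$image" . \
    && docker push "$image" \
    && write_pod_manifest "$image" "$manifest" \
    && kubectl apply -f "$manifest"
}
```

A call such as `deploy_app <registry>/<name>:<tag> pod.yaml` would run from the directory that contains the Dockerfile.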

If a whole worker node dies or becomes inaccessible, Kubernetes will select new nodes for all the containers that were running on the node and run them on the newly selected nodes.

Assigning Pods to Nodes

In essence, all the nodes are now a single pool of computational resources waiting for applications to consume them. A developer doesn’t usually care what kind of server the application runs on, as long as the server can provide adequate system resources. There are cases, however, where the developer does care what kind of hardware the application runs on.

E.g.: one of your apps may need to run on a system with SSDs instead of HDDs, while other apps run fine on HDDs.

https://kubernetes.io/docs/concepts/configuration/assign-pod-node/
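One way to express the SSD requirement is a node label plus a `nodeSelector` in the pod description. The sketch below is hedged: the label `disktype=ssd`, the pod name, and both function names are illustrative choices, and the image is the sample image used later in this article.

```shell
# Label a node as having SSD storage (label key and value are illustrative).
label_ssd_node() {
  kubectl label nodes "$1" disktype=ssd
}

# Write a Pod manifest that can only be scheduled onto nodes
# carrying the disktype=ssd label.
write_ssd_pod() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hello-nodejs-ssd
spec:
  containers:
  - name: hello-nodejs
    image: docker.io/zseashellhb/hello-nodejs:1.0
  nodeSelector:
    disktype: ssd
EOF
}
```

Typical usage would be `label_ssd_node <node-name>`, then `write_ssd_pod pod-ssd.yaml` and `kubectl apply -f pod-ssd.yaml`. If no node carries the label, the pod stays in the Pending state.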

CMD:

kubectl get nodes -o wide

kubectl describe node ${Nodename}

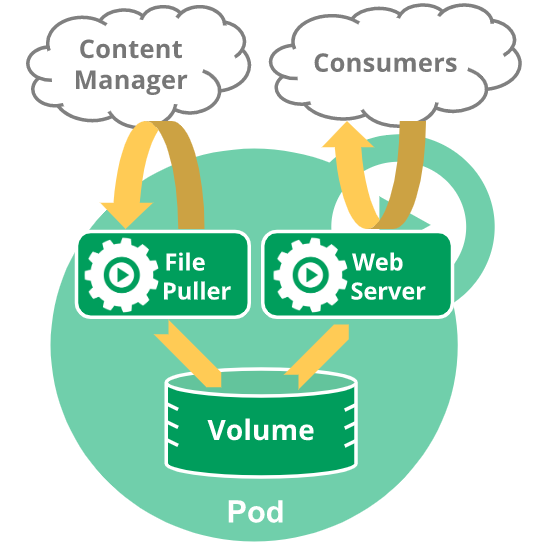

5. Pod

Of all the objects in the Kubernetes object model, the pod is the smallest building block.

A pod can contain one or more containers. Containers within a single pod share:

- Network: a single IP address and port space, so they can reach each other over localhost

- Storage: volumes

- Any additional specifications you’ve applied to the pod

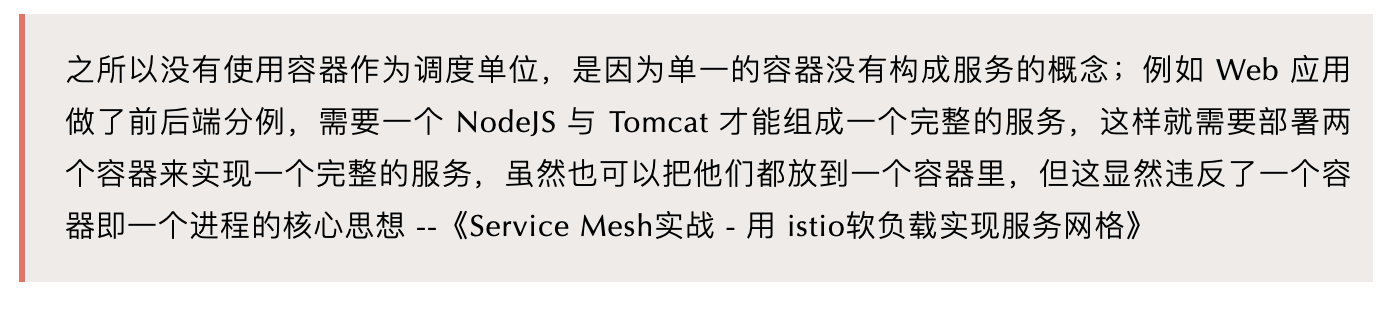

Why Pod?

Pods in a Kubernetes cluster can be used in two main ways:

- Pods that run a single container.

- Pods that run multiple containers that need to work together: one or more application containers that are relatively tightly coupled.

Sidecar container example: a sidecar for Papertrail logging configuration in Kubernetes, https://help.papertrailapp.com/kb/configuration/configuring-centralized-logging-from-kubernetes/
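To make the sidecar idea concrete, here is a generic two-container pod in which both containers mount the same `emptyDir` volume. It is a sketch and not the Papertrail setup linked above: the pod name, container names, paths, and the `busybox` image are all assumptions chosen for illustration.

```shell
# Write a Pod manifest with an app container and a log-reading sidecar
# that share one emptyDir volume. All names and paths are illustrative.
write_sidecar_pod() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: app-with-sidecar
spec:
  volumes:
  - name: logs
    emptyDir: {}
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
    volumeMounts:
    - name: logs
      mountPath: /var/log/app
  - name: log-sidecar
    image: busybox
    command: ["sh", "-c", "touch /var/log/app/app.log; tail -f /var/log/app/app.log"]
    volumeMounts:
    - name: logs
      mountPath: /var/log/app
EOF
}
```

After `write_sidecar_pod sidecar.yaml` and `kubectl apply -f sidecar.yaml`, the sidecar container streams whatever the app container writes, because both see the same volume.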

A pod is also the basic unit of scaling. If you need to scale a container individually, this is a clear indication that it needs to be deployed in a separate pod.

Basically, you should always gravitate toward running containers in separate pods, unless a specific reason requires them to be part of the same pod.

Ref:

- https://kubernetes.io/docs/concepts/workloads/pods/pod-overview/

- https://www.bmc.com/blogs/kubernetes-pods/

Sample

Image: zseashellhb/hello-nodejs:1.0 (public on Docker Hub)

Run a pod with kubectl:

kubectl run hello-nodejs --image=docker.io/zseashellhb/hello-nodejs:1.0

kubectl get pods -o wide

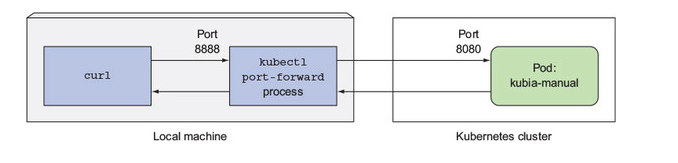

How to access a pod:

Port Forward (Forwarding a local network port to a port in the pod)
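A port forward can be wrapped in a small helper like the one below. This is a sketch: `forward_pod` is a hypothetical name, and the source does not state which port the sample app listens on, so the port numbers in the usage note are placeholders.

```shell
# Forward a local port to a port inside a pod.
# forward_pod is a hypothetical helper; it only wraps "kubectl port-forward".
forward_pod() {
  pod="$1"; local_port="$2"; pod_port="$3"
  kubectl port-forward "pod/${pod}" "${local_port}:${pod_port}"
}
```

For example, `forward_pod <pod-name> 8080 8080` keeps running in the foreground; while it runs, requests to localhost:8080 on your machine reach port 8080 in the pod (assuming the app listens there).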


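How to get a pod’s container names (use .image instead of .name to print the images):

kubectl get po <pod-name> -o jsonpath='{.spec.containers[*].name}'

If your pod includes multiple containers, you have to explicitly specify the container name by including the -c <container-name> option, for example with kubectl logs or kubectl exec.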
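The two operations fit together: first list the containers in a pod, then address one of them with `-c`. The helper names below are made up for illustration, and the sketch assumes the pod is in the current namespace.

```shell
# List the container names in a pod, one per line.
pod_containers() {
  kubectl get pod "$1" -o jsonpath='{range .spec.containers[*]}{.name}{"\n"}{end}'
}

# Show the logs of one specific container in a multi-container pod.
container_logs() {
  kubectl logs "$1" -c "$2"
}
```

For example, run `pod_containers <pod-name>` to see the names, then `container_logs <pod-name> <container-name>` for the one you are interested in.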

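Cleanup

kubectl delete deployment/hello-nodejs

This deletes the Deployment together with its ReplicaSet and Pod. (With kubectl 1.18 and later, kubectl run creates only a bare Pod, so use kubectl delete pod hello-nodejs instead.)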

6. Pod and Node

A Pod always runs on a Node. A Node is a worker machine in Kubernetes and may be either a virtual or a physical machine, depending on the cluster. Each Node is managed by the Master. A Node can have multiple pods, and the Kubernetes master automatically handles scheduling the pods across the Nodes in the cluster. The Master’s automatic scheduling takes into account the available resources on each Node.
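To see the pod-to-node relationship on a live cluster, you can filter pods by the node they were scheduled onto. A minimal sketch, where `pods_on_node` is a hypothetical helper name:

```shell
# List every pod (in all namespaces) that is scheduled on the given node.
pods_on_node() {
  kubectl get pods --all-namespaces -o wide --field-selector "spec.nodeName=$1"
}
```

For example, `pods_on_node <node-name>` with a name taken from `kubectl get nodes` shows both your own pods and the system pods running on that node.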

7. Reference

- Kubernetes 101: Pods, Nodes, Containers, and Clusters

- kubectl Cheat Sheet